We report the development of GPT-4, a large-scale, multimodal model which can accept image and text inputs and produce text outputs. While less capable than humans in many real-world scenarios, GPT-4 exhibits human-level performance on various professional and academic benchmarks, including passing a simulated bar exam with a score around the top 10% of test takers. ~OpenAI

When Is ChatGPT 4 Coming Out?

ChatGPT 4 was rolled out to the public on March 14, 2023, and in ChatGPT it is limited to Plus subscribers. Developers can also use GPT-4 through the OpenAI API to build applications and services, but access requires joining the API waitlist.

ChatGPT 4 vs GPT-3.5

During OpenAI's developer livestream, several of the model's most impressive capabilities were demonstrated, including:

• GPT-4 can work with a much longer context

• It is a raw upgrade in intelligence and reasoning

• It is multimodal: it can take images as input, not just text

• It is better at bridging the gap between a model and practical, working applications

Let's break these down into short summaries:

When you ask GPT-4 to summarize a block of text using only words that start with a specific letter, it doesn't strain at all. In the demo, the model summarized an OpenAI blog post in a single sentence in which every word started with the letter "Q". GPT-3.5, the model behind the original ChatGPT, could not complete this task: it struggled for a bit and eventually gave up.
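To make the constraint concrete, here is a minimal Python sketch of a checker you could use to verify such an output yourself. The function name `all_words_start_with` is hypothetical, and the word-splitting rule (runs of letters and apostrophes, ignoring punctuation and digits) is an assumption, not how OpenAI scored the demo.

```python
import re


def all_words_start_with(text: str, letter: str) -> bool:
    """Return True if every word in `text` begins with `letter` (case-insensitive).

    Assumption: a "word" is a run of ASCII letters, optionally containing
    apostrophes; punctuation and digits are ignored.
    """
    words = re.findall(r"[A-Za-z][A-Za-z']*", text)
    if not words:
        return False  # an empty summary does not satisfy the constraint
    target = letter.lower()
    return all(word[0].lower() == target for word in words)


print(all_words_start_with("Quick queries quietly quash quirky quandaries.", "q"))
print(all_words_start_with("Quick queries quietly solve quirky quandaries.", "q"))
```

The first call prints `True`; the second prints `False` because "solve" breaks the rule.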

In case you missed the demo, you can catch it here: OpenAI GPT-4 developer livestream.

With a little help, the model built a fully functional Discord bot, which was then used for the rest of the presentation.

A cool thing about these models, and GPT-4 in particular, is that you can grab the documentation of an online service (for example, an API's documentation), paste it in, and GPT-4 will understand it and use that latest version to give correct answers. This matters because the model still has limited knowledge of events after 2021. (I think it's always a good idea to give it the latest, up-to-date information.)
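Here is a minimal sketch of this "paste the docs into the prompt" pattern, assuming the OpenAI Chat Completions message format (a list of `role`/`content` dictionaries). The helper `build_messages`, the system prompt wording, and the sample documentation string are all hypothetical; the sketch only builds the request body and does not send it.

```python
import json


def build_messages(documentation: str, question: str) -> list[dict]:
    """Build a chat message list that puts up-to-date docs in front of the question.

    Hypothetical helper: the system prompt wording is an illustrative assumption.
    """
    system_prompt = (
        "Answer using the documentation provided by the user. "
        "If it conflicts with what you already know, trust the documentation."
    )
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": f"Documentation:\n{documentation}\n\nQuestion: {question}"},
    ]


# Request body shaped like a Chat Completions call. Sending it (for example with
# the official `openai` package) is left out so the sketch runs offline.
payload = {
    "model": "gpt-4",
    "messages": build_messages(
        "Hypothetical docs: v2 of the API renamed `send()` to `post()`.",
        "How do I send a message with v2?",
    ),
}
print(json.dumps(payload, indent=2))
```

The design choice here is simply ordering: the fresh documentation arrives before the question, so the model reads the current behavior before answering instead of relying on its pre-2021 training data.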

Multimodal? What does it mean?

The second mind-blowing thing is that GPT-4 can take in images, understand them, and respond in the context of that input. This remains the single best feature; however, it is not yet publicly available, because image input is currently offered only through a partnership between OpenAI and another company.

Through the Discord bot interface (which GPT-4 itself built), GPT-4 accepts two inputs:

• An image

• A command telling the bot what to do with the image, e.g. "What is funny about this image? Describe it panel by panel."

Here is an example:

User:

What is funny about this image? Describe it panel by panel.

GPT4:

The image shows a package for a “Lightning Cable” adapter with three panels.

Panel 1: A smartphone with a VGA connector (a large, blue, 15-pin connector typically used for computer monitors) plugged into its charging port.

Panel 2: The package for the “Lightning Cable” adapter with a picture of a VGA connector on it.

Panel 3: A close-up of the VGA connector with a small Lightning connector (used for charging iPhones and other Apple devices) at the end.

The humor in this image comes from the absurdity of plugging a large, outdated VGA connector into a small, modern smartphone charging port.

When did we get here? Calm down.

The ability to take in images is a huge step, and it is not limited to explaining jokes. As they say, a picture is worth a thousand words; here is why.

As developers, we thought our jobs were safe simply because it would take a client ages to explain to a bot what they want. That thesis will have to die completely.

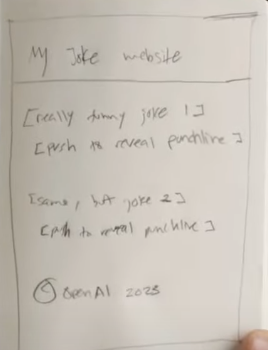

It seems AI has gone as far as understanding a doctor's handwriting (if doctors still write by hand), then turning whatever was written and sketched on paper into a functional, bloody HTML/CSS and JavaScript website.

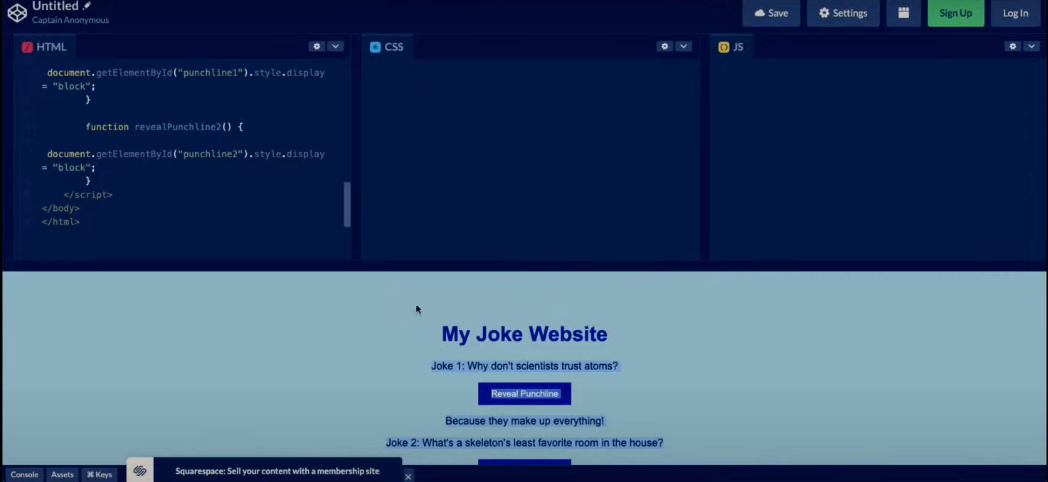

Here is exactly what happened: the hand-drawn mock-up of the website was fed to the bot, and it spat out some code that, when run on CodePen, produced a fully functional jokes website... from a doctor's handwriting.

User:

Write brief HTML/JS to turn this mock-up into a colorful website, where the jokes are replaced by two real jokes

And these were the results: from a hand drawing to a beautiful, working website.